Source: Harbin Institute of Technology SCIR

This article is approximately 9300 words long and is recommended for a reading time of 10+ minutes.

This article will discuss the interpretability of the attention mechanism.

Introduction

Since Bahdanau introduced Attention as soft alignment in neural machine translation in 2014, a large number of natural language processing works have adopted it as an important module to improve performance in models. Numerous experiments have shown that the Attention mechanism is computationally efficient and significantly effective. Consequently, discussions and research on its interpretability have emerged. On one hand, people hope to better understand its internal mechanisms to optimize models; on the other hand, some scholars have raised questions about it. Here, as a prospective PhD student in the SCIR laboratory, I have written this reflection note based on my understanding of the Attention mechanism, hoping to inspire readers. Due to personal limitations, any errors in the article are welcome to be pointed out by everyone.

1. Attention Mechanism

1.1 Background

The Attention mechanism is currently one of the most commonly used methods in the field of natural language processing because it can significantly improve model performance across a range of tasks, especially in sequence-to-sequence models based on recurrent neural networks. Coupled with the extensive use of Google’s Transformer model [1], which is entirely based on Attention, and the BERT model [2], the Attention mechanism has become a textbook-worthy technique. On one hand, Attention intuitively simulates the behavior of humans concentrating on certain keywords when understanding language, just as Bahdanau [3] introduced it as soft alignment in neural machine translation. On the other hand, numerous experiments over the years have shown that Attention is indeed a feasible and efficient method for enhancing model performance. Therefore, further exploring the intrinsic principles of this mechanism, explaining its effectiveness, and providing proof is a valuable research direction.

1.2 Structure

Although different papers may implement the Attention mechanism in various ways, they generally follow the same paradigm. It consists of three components:

-

Key corresponds to the value and is used to compute similarity with the query, serving as the basis for selecting Attention;

-

Query is the query during a single execution of Attention;

-

Value is the data that is attended to and selected.

The corresponding formula is as follows:

Where the value (Value) is often the output from the previous layer and generally remains unchanged. The other components, such as key, query, and similarity function, often have different implementation methods. Here we first introduce the Attention structure implemented by Bahdanau [3] and Yang [7], as the explorations of interpretability by Serrano [4] and Jain [5] are also based on this.

The formula for Attention is as follows:

(As Value) is the output tensor at the i-th position from the previous layer; if the previous layer is a bidirectional RNN structure, then

(As Value) is the output tensor at the i-th position from the previous layer; if the previous layer is a bidirectional RNN structure, then ;

;  is the key (Key) at the i-th position, calculated from the value (Value) through a fully connected layer;

is the key (Key) at the i-th position, calculated from the value (Value) through a fully connected layer;  is the query (Query) of this Attention layer, randomly initialized and synchronized during training. If Attention is not a standalone layer but is built on the decoder, then

is the query (Query) of this Attention layer, randomly initialized and synchronized during training. If Attention is not a standalone layer but is built on the decoder, then  is related to the output at the corresponding position during the encoding phase; the similarity function is the matrix dot product, and the resulting

is related to the output at the corresponding position during the encoding phase; the similarity function is the matrix dot product, and the resulting  is the Attention weight, and

is the Attention weight, and  is the output of the Attention layer.

The core idea is as follows:

is the output of the Attention layer.

The core idea is as follows:

-

Calculate a non-negative normalized weight for each input element;

-

Multiply these weights by the corresponding components’ representations;

-

Sum the results to produce a fixed-length representation.

This is the original form of Attention, and the experimental tests on its interpretability are also based on this model.

2. Definition of Interpretability

There are various definitions of interpretability, and the differences often arise from here, leading to different conclusions. However, there is some consensus that can be summarized.

The conceptual consensus on interpretability can be described as follows: If a model is interpretable, it means that humans can understand the model, which can be divided into two aspects: one is that the model is transparent to humans [9], allowing the prediction of corresponding parameters and model decisions before training on specific tasks; the other is that after the model makes a decision, humans can understand the reasons behind that decision. There are also other definitions from different perspectives, such as: interpretability means being able to reconstruct the model’s decision-making process manually.

Specifically, for the interpretability of the Attention model, it can generally be refined into:

-

The magnitude of Attention weights should be positively correlated with the importance of the corresponding positional information;

-

Input units with high weights should have a decisive effect on the output results.

3. Specific Arguments

3.1 Not Explanation

Regarding the lack of interpretability, Serrano [4] and Jain [5] each proposed some experiments and arguments. Their work overlaps and complements each other; the former explores a shallower level, only considering whether the Attention layer’s weights are positively correlated with the corresponding input positions, using the method of erasing intermediate representations, i.e., continuously nullifying some weights to observe changes in the model. The article mainly explores different erasure methods, while the latter’s work is slightly deeper, exploring not only the impact of removing key weights on the model but also introducing the idea of constructing adversarial Attention weights to test changes in the model.

3.1.1 Intermediate Representation Erasure

Intermediate representation erasure considers a relatively shallow understanding of Attention, based on the logic that the more important the weight, the greater the impact on the output result, and nullifying it will directly affect the result.

First, introduce evaluation metrics, namely how much the weights and output results change:

-

Total Variance Distance (TVD) as an indicator of the difference in output result distributions, defined as:

Where

and

and  are two different output result distributions.

are two different output result distributions.

-

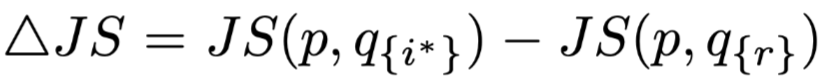

Jensen-Shannon Divergence (JSD) as an indicator of the difference in output result distributions and Attention weights, defined as:

Where

and

and  are two different distributions, which can be either output results or Attention weights,

is Kullback-Leibler Divergence.

are two different distributions, which can be either output results or Attention weights,

is Kullback-Leibler Divergence.

The specific implementation is shown in Figure 1, where the model is divided into two parts: the embedding and encoding part, for example, implementing word embeddings using a fully connected layer and encoding with a bidirectional LSTM; various models used in the experiment differ. The second part is the decoding part, where the output tensor obtained from the Attention layer is decoded into the results needed for specific tasks. In text classification tasks, it is a dimensional transformation implemented by a fully connected layer. It is important to note that the Attention layer does not function as in seq-to-seq models on the decoder but is tested as an independent layer.

Figure 1 Overall model of intermediate representation erasure

The entire model runs twice: the first time with normal input and output, retaining the results obtained; the second time, the selected weights on the Attention layer are set to zero, and the weights are re-normalized using Softmax, continuing the subsequent process to obtain results, and calculating the TVD metric with the data obtained the first time. Erasing on the Attention layer rather than at the input end is to isolate its impact from the preceding encoding part. Additionally, the purpose of renormalizing is that when the model nullifies some high-weight parameters, it causes the output tensor of the Attention layer to approach zero, which is a situation the model has not encountered during training, leading to uncontrollable decision-making behavior.

Serrano [4] designed experiments using text classification tasks and selected four datasets, as shown in Figure 2, and also designed four different models for comparative testing:

Figure 2 Four text classification datasets

-

Hierarchical Attention Network proposed by Yang [7], as shown on the left side of Figure 3, divided into word-level and sentence-level parts, with the experiment only testing the sentence-level Attention layer, while the previous parts are regarded as the encoding phase;

-

Flat Attention Network, modified from the previous model, only has a word-level Attention layer operating on the entire document without sentence separation;

-

Flat Attention Network with CNN replaces the bidirectional RNN structure in the previous model with a CNN, as shown on the right side of Figure 3, referencing Kim’s implementation [8];

-

No Encoder does not use an encoder, directly feeding the results after word embedding into the Attention layer. This control group is designed to eliminate the encoder and prevent individual tokens from obtaining contextual information.

Figure 3 Structures of Hierarchical Attention Networks using BiRNN and CNN as encoding parts

The experiments mainly consist of two modules: single weight nullification and group weight nullification. The difference lies in that the former tests the change in the entire model’s output after nullifying the intermediate representation corresponding to the highest weight, while the latter tests how many intermediate representations need to be nullified and how to nullify them to change the model’s final decision, thus finding evidence for interpretability from the experimental data.

Single Attention Weight’s Importance first nullifies the intermediate representation corresponding to the highest Attention weight, using the process shown in Figure 1 to calculate the normal result and the result after nullification for the JSD metric , where

, where  is the distribution of the normal result,

is the distribution of the normal result,  is the result distribution after nullifying the highest weight’s corresponding position. To verify how much this distance is, a random position is re-selected

is the result distribution after nullifying the highest weight’s corresponding position. To verify how much this distance is, a random position is re-selected , and the same process is used to nullify the intermediate representation, obtaining the corresponding JSD metric

, and the same process is used to nullify the intermediate representation, obtaining the corresponding JSD metric . At this point, we can use

. At this point, we can use  for comparison. Intuitively, if the high-weight items are indeed more important, this formula should always be positive, and the larger the difference between the two nullified weights

for comparison. Intuitively, if the high-weight items are indeed more important, this formula should always be positive, and the larger the difference between the two nullified weights , the larger the value should be. The charts presented by the author in the article, as shown in Figure 4 on the left, have the x-axis as the difference between the two weights and the y-axis as

, the larger the value should be. The charts presented by the author in the article, as shown in Figure 4 on the left, have the x-axis as the difference between the two weights and the y-axis as  value. The author states that there are almost no negative results in the data obtained, and even if there are, they tend to be close to zero. Additionally, as the difference between the two weights increases, the degree of change in the model’s output distribution also increases correspondingly. However, the author believes that “even if the difference between the two weights reaches a large extent of 0.4 (weights satisfy sum normalization), most positive

value. The author states that there are almost no negative results in the data obtained, and even if there are, they tend to be close to zero. Additionally, as the difference between the two weights increases, the degree of change in the model’s output distribution also increases correspondingly. However, the author believes that “even if the difference between the two weights reaches a large extent of 0.4 (weights satisfy sum normalization), most positive  values are still very close to zero,” hence preliminarily concluding that the Attention mechanism has phenomena contrary to intuition.

However, results from the article’s appendix show that the results of non-hierarchical models without encoding layers, as shown in Figure 4 on the right, indicate that the issue of the degree of change being irrelevant is not as severe as suggested. In other words, under this group of data, the author’s conclusion is not rigorous. The article proposes a hypothesis that when the encoding layer encodes each token at each position, it includes contextual information, meaning that the amount of information has undergone a similar averaging operation. The output of each token from the encoding layer contains, to some extent, the information that needs to be attended to, thus weakening the impact of the Attention layer’s weights. Of course, the article does not conduct experiments to verify this hypothesis.

Figure 4 Comparison of results based on hierarchical models using BiRNN and non-hierarchical models without encoding layers

Subsequently, Serrano [4] designed a series of experiments to strengthen the exploration of the impact of single nullification operations, using the evaluation metric of the probability of model decision reversal, i.e., nullifying the highest weight corresponding position

values are still very close to zero,” hence preliminarily concluding that the Attention mechanism has phenomena contrary to intuition.

However, results from the article’s appendix show that the results of non-hierarchical models without encoding layers, as shown in Figure 4 on the right, indicate that the issue of the degree of change being irrelevant is not as severe as suggested. In other words, under this group of data, the author’s conclusion is not rigorous. The article proposes a hypothesis that when the encoding layer encodes each token at each position, it includes contextual information, meaning that the amount of information has undergone a similar averaging operation. The output of each token from the encoding layer contains, to some extent, the information that needs to be attended to, thus weakening the impact of the Attention layer’s weights. Of course, the article does not conduct experiments to verify this hypothesis.

Figure 4 Comparison of results based on hierarchical models using BiRNN and non-hierarchical models without encoding layers

Subsequently, Serrano [4] designed a series of experiments to strengthen the exploration of the impact of single nullification operations, using the evaluation metric of the probability of model decision reversal, i.e., nullifying the highest weight corresponding position and randomly selected positions

and randomly selected positions to observe the situation of model decision behavior reversal, obtaining experimental data as shown in Figure 5, where the orange area indicates the cases that led to reversal, while the blue area indicates the opposite. However, in most cases, the model decision does not reverse, meaning that this experiment is somewhat ineffective, or further, simply nullifying an intermediate representation does not affect the robustness of the Attention layer, especially when there is an encoder with contextual relevance.

Figure 5 Situation of model decision reversal

Jain [5] also designed a group of leave-one-out experiments, using a model framework similar to Serrano [4], but with an increased dataset. It involves text classification, question answering, and natural language inference tasks, with datasets as shown in Figure 6, where text classification tasks are modified into binary classification tasks to simplify model requirements.

Figure 6 Datasets for text classification, question answering, and natural language inference

Jain [5] believes that the Attention weights learned by the model should correspond to the importance evaluation metrics of feature representations, comparing two evaluation metrics: the model output results after nullifying different positions’ intermediate representations and the original output results, and a set of TVD values obtained from gradient backpropagation for each position. The rationale for the former is that if an important intermediate representation is nullified, the change in the model’s decision result will be significant, leading to a large corresponding TVD value, while the latter relates to the gradient values and the focus of the model’s decision-making. By calculating the JSD for these two metrics with Attention weights, the corresponding distributions are obtained as shown in Figure 7, where only the data for the gradient metric is displayed. Here, JSD is transformed into correlation, with 0 indicating no correlation and 1 indicating complete consistency. The data for the other metric is similar, so it will not be elaborated further. Basically, simply nullifying an intermediate representation in the experiments cannot serve as a strong argument to prove whether Attention is interpretable.

to observe the situation of model decision behavior reversal, obtaining experimental data as shown in Figure 5, where the orange area indicates the cases that led to reversal, while the blue area indicates the opposite. However, in most cases, the model decision does not reverse, meaning that this experiment is somewhat ineffective, or further, simply nullifying an intermediate representation does not affect the robustness of the Attention layer, especially when there is an encoder with contextual relevance.

Figure 5 Situation of model decision reversal

Jain [5] also designed a group of leave-one-out experiments, using a model framework similar to Serrano [4], but with an increased dataset. It involves text classification, question answering, and natural language inference tasks, with datasets as shown in Figure 6, where text classification tasks are modified into binary classification tasks to simplify model requirements.

Figure 6 Datasets for text classification, question answering, and natural language inference

Jain [5] believes that the Attention weights learned by the model should correspond to the importance evaluation metrics of feature representations, comparing two evaluation metrics: the model output results after nullifying different positions’ intermediate representations and the original output results, and a set of TVD values obtained from gradient backpropagation for each position. The rationale for the former is that if an important intermediate representation is nullified, the change in the model’s decision result will be significant, leading to a large corresponding TVD value, while the latter relates to the gradient values and the focus of the model’s decision-making. By calculating the JSD for these two metrics with Attention weights, the corresponding distributions are obtained as shown in Figure 7, where only the data for the gradient metric is displayed. Here, JSD is transformed into correlation, with 0 indicating no correlation and 1 indicating complete consistency. The data for the other metric is similar, so it will not be elaborated further. Basically, simply nullifying an intermediate representation in the experiments cannot serve as a strong argument to prove whether Attention is interpretable.

Figure 7 Correlation between Attention distribution and gradient distribution

The idea of Importance is to find a minimal intermediate representation nullification set that can make the model’s decision reverse; if the Attention weights for the positions in this set are the largest, it indicates that the ranking of Attention weights regarding the importance of intermediate representations is reasonable. The author proposes the following process for verification:

Figure 7 Correlation between Attention distribution and gradient distribution

The idea of Importance is to find a minimal intermediate representation nullification set that can make the model’s decision reverse; if the Attention weights for the positions in this set are the largest, it indicates that the ranking of Attention weights regarding the importance of intermediate representations is reasonable. The author proposes the following process for verification:

-

Add Attention weights in descending order to the nullification set;

-

If the model’s decision reverses, stop extending the set;

-

Check if there is a proper subset that can also reverse the model’s decision.

However, the problem here is that finding such a proper subset requires exponential time complexity, which is almost unachievable. The requirements can only be relaxed to find a smaller arbitrary set. The author designed three other sorting schemes to test whether the number of elements needed to nullify to reverse the model’s decision is smaller than that using Attention weight sorting. The three sorting schemes are as follows:

-

-

Sorting based on the size of the gradient values backpropagated to the Attention layer;

-

Sorting based on the product of gradient values and Attention weights.

These three candidate sortings are not aimed at finding a golden-standard nullification combination but rather to compare with the sorting formed by Attention weights. Additionally, some data items need to be discarded to prevent damaging the experimental effect, such as in some cases, even if only one intermediate representation is left after nullification, the model’s decision does not reverse; or if the input sequence length is 1, sorting is impossible. The experimental results are shown in Figure 8, where each rectangle represents the value range of data, and the horizontal line in the rectangle represents the mean of the data. If no horizontal line is indicated, it means the mean is close to 1. In most cases, the effect of random sorting is far worse than the other three methods, while the data range of the other three sorts overlaps, but in terms of mean performance, generally, the gradient value multiplied by Attention weights performs best, followed by sorting by gradient values, and the worst is sorting by Attention weights.

Figure 8 Proportion of Attention weights nullified to reverse model decision under different sorting rules

The conclusion drawn by the author is that Attention does not maximize the description of the model’s decision behavior, and using Attention weights as a basis is effective but not optimal. However, the issue here is that the correlation between nullifying Attention weights and the reversal of model decisions is merely an intuitive hypothesis and not a strong prior or axiom.

Figure 8 Proportion of Attention weights nullified to reverse model decision under different sorting rules

The conclusion drawn by the author is that Attention does not maximize the description of the model’s decision behavior, and using Attention weights as a basis is effective but not optimal. However, the issue here is that the correlation between nullifying Attention weights and the reversal of model decisions is merely an intuitive hypothesis and not a strong prior or axiom.

3.1.2 Constructing Adversarial Weights

Jain [5] believes that changes in Attention weights should correspondingly affect the model’s prediction results; this idea is similar to the reversal of model decisions, but the difference is that here the goal is to construct a set of counterintuitive weight distributions to deceive the model into making the same decision, thereby proving that the Attention mechanism is unreliable.

The adversarial effect the author aims to construct is shown in Figure 9, displaying a negative review example. On the left is the original weight learned by the model, where the maximum weight corresponds to the word waste, followed by asking, and the classifier gives a result of 0.01 (0 indicates negative, 1 indicates positive, and 0.5 indicates neutral), which aligns with human judgment of the sentence’s sentiment polarity. The right side shows the constructed adversarial weights, where the maximum weight corresponds to the word was, followed by myself, and such counterintuitive Attention methods can still yield the same result from the classifier.

Figure 9 Example of text sentiment analysis

The author designed two methods to conduct experiments. The first is to simply randomize the positions of the original Attention weights, and the second is to design a target function to train adversarial weights that yield a model result distribution similar to the original while differing as much as possible in Attention weight distribution.

Attention Permutation randomizes the Attention weights and observes changes in the model output results, using the TVD metric to evaluate the degree of change. Due to randomness and computational complexity limitations, only 100 groups of weights are randomly selected for each sample during the experiment, and the median of the TVD metrics is taken for evaluation. As shown in Figure 10, this is a heatmap, where the x-axis represents the median degree of change in model output, and the y-axis represents the maximum value of the original Attention weights, with different colors representing different categories. The author aims to verify whether only a few features are needed to explain an output result by classifying according to the range of maximum Attention weights. The author uses the SST dataset as a standard, concluding that randomizing Attention does not significantly affect the model’s output results. However, this conclusion should be debated, as the author attributes the inconsistency in experimental results solely to the dataset. For example, in the Diabetes dataset, only a few key features can decisively influence the results. In fact, when multiple datasets exhibit different or even completely opposite results, selecting a dataset that aligns with one’s expectations as a standard is biased, and it is not advisable to draw conclusions without in-depth research and experimentation.

Figure 10 Changes in model output results after randomizing weights

Adversarial Attention is the author’s main experiment, constructing a method to generate adversarial weights, aiming to explicitly find a weight distribution or set that is as different as possible from the original Attention weights while effectively ensuring that the model prediction results remain unchanged. The mathematical expression of the construction method is as follows:

Figure 10 Changes in model output results after randomizing weights

Adversarial Attention is the author’s main experiment, constructing a method to generate adversarial weights, aiming to explicitly find a weight distribution or set that is as different as possible from the original Attention weights while effectively ensuring that the model prediction results remain unchanged. The mathematical expression of the construction method is as follows:

Where

Where  represents a precise definition of a small value, i.e., using the constructed weights to make the model’s decision and the decision using original weights to achieve the maximum TVD distance;

represents a precise definition of a small value, i.e., using the constructed weights to make the model’s decision and the decision using original weights to achieve the maximum TVD distance;  is the model result obtained using the original weights.

is the model result obtained using the original weights. represents the weight distribution of the i-th group constructed, while

represents the weight distribution of the i-th group constructed, while  is the model result obtained using the i-th group of constructed weights. The optimization objective is to generate the Attention weights of the groups while ensuring that the results produced by each group of weights do not exceed the TVD distance, maximizing the JS distance between each group of weights and the original weights, as well as between each group of weights themselves. The specific implementation of the optimization objective is

is the model result obtained using the i-th group of constructed weights. The optimization objective is to generate the Attention weights of the groups while ensuring that the results produced by each group of weights do not exceed the TVD distance, maximizing the JS distance between each group of weights and the original weights, as well as between each group of weights themselves. The specific implementation of the optimization objective is , where λ is a hyperparameter set to 500 during training.

, where λ is a hyperparameter set to 500 during training.

Figure 11 Histogram of JS distance distributions under constraints

The author continues to consider the relationship between the maximum Attention weight value (to consider the effect of feature effectiveness, i.e., the larger the maximum weight, the more the model focuses on certain features) and the maximum JS distance of the adversarial weights that can be constructed. Intuitively, if the distribution of the original Attention weights is steeper, the difficulty of generating effective (large JSD values) adversarial weights will also increase. As shown in Figure 12, this is also a heatmap, where the x-axis represents the maximum JS distance and the y-axis represents the maximum original Attention weight. The author believes that although there is indeed a previously mentioned trend, it is undeniable that there are indeed many examples in each dataset where the original Attention weights are high while still being able to construct adversarial weights with large JS distances. In other words, the idea that a specific set of features can completely influence the results is misleading.

Figure 11 Histogram of JS distance distributions under constraints

The author continues to consider the relationship between the maximum Attention weight value (to consider the effect of feature effectiveness, i.e., the larger the maximum weight, the more the model focuses on certain features) and the maximum JS distance of the adversarial weights that can be constructed. Intuitively, if the distribution of the original Attention weights is steeper, the difficulty of generating effective (large JSD values) adversarial weights will also increase. As shown in Figure 12, this is also a heatmap, where the x-axis represents the maximum JS distance and the y-axis represents the maximum original Attention weight. The author believes that although there is indeed a previously mentioned trend, it is undeniable that there are indeed many examples in each dataset where the original Attention weights are high while still being able to construct adversarial weights with large JS distances. In other words, the idea that a specific set of features can completely influence the results is misleading.

Figure 12 Relationship between JS distance and maximum original Attention weight distribution

To summarize the above conclusions, Serrano [4] designed multiple experiments around intermediate representation erasure, observing changes in model decisions, concluding that: ‘Attention does not necessarily correspond to importance.’ because in experiments, Attention often fails to successfully identify the most important intermediate representations that can affect model decisions. Additionally, there is a significant but untested hypothesis: ‘Attention magnitudes do seem more helpful in uncontextualized cases.’ This suggests that contextualized encoders may lead to the Attention mechanism being difficult to interpret, but the author has not conducted in-depth research on this.

Jain [5] raised two questions based on the contribution of Attention to the model’s transparency [9]:

Figure 12 Relationship between JS distance and maximum original Attention weight distribution

To summarize the above conclusions, Serrano [4] designed multiple experiments around intermediate representation erasure, observing changes in model decisions, concluding that: ‘Attention does not necessarily correspond to importance.’ because in experiments, Attention often fails to successfully identify the most important intermediate representations that can affect model decisions. Additionally, there is a significant but untested hypothesis: ‘Attention magnitudes do seem more helpful in uncontextualized cases.’ This suggests that contextualized encoders may lead to the Attention mechanism being difficult to interpret, but the author has not conducted in-depth research on this.

Jain [5] raised two questions based on the contribution of Attention to the model’s transparency [9]:

-

How much correlation is there between Attention weights and feature importance metrics?

-

Do different weight distributions necessarily lead to different model decisions?

The answers given are as follows:

-

‘Only weakly and inconsistently,’ the correlation between Attention weights and feature importance metrics (gradient distributions, degree of change in model results after nullifying an intermediate representation) is not significant and unstable;

-

‘It is very possible to construct adversarial attention distributions that yield effectively equivalent predictions.’ It is easy to construct adversarial weights that focus on features completely different from the original weights, and furthermore, random weight settings often do not significantly affect the model’s decision results.

3.2 Not Not Explanation

This section titled Not Not Explanation is a rebuttal to the previous arguments about the lack of interpretability of the Attention mechanism, rather than proving that Attention is interpretable. This mainly references Wiegreffe [6]’s rebuttal to Jain [5]. Wiegreffe [6] provides two main reasons:

-

Attention Distribution is not a Primitive. The distribution of Attention weights does not exist independently; due to the entire process of forward and backward propagation, the parameters of the Attention layer cannot be isolated from the model, otherwise, it loses practical significance;

-

Existence does not Entail Exclusivity. The existence of Attention distributions does not imply exclusivity; Attention only provides an explanation, not the only explanation. Especially when the dimensionality of the intermediate representation vectors is large while the output categories are few, the dimensionality reduction mapping functions can easily have significant flexibility.

The author designed four control experiments to counter this, which can be referenced in Figure 13. The right side shows the model used, which is consistent with the previously introduced model, including embedding layers, encoding layers, and independent Attention layers, with the task also being text classification. The left side corresponds to the scope of each experiment, J&W refers to Jain [5]’s experiment, which only involves modifying Attention weights. It is important to note that in the figure, Attention Parameters represent the parameters trained in the Attention layer, and the final α weights are calculated from them. §3.2 is the experiment designed by Wiegreffe [6] in the third section of their paper, with the brackets indicating the layers involved in each experiment. From right to left, the four experiments are:

-

Uniform as Adversary: Train a baseline model with fixed Attention weights set to the mean;

-

Variance with a Model: Reinitialize and retrain the model with a random seed, serving as a normal baseline for Attention weight distribution deviation;

-

Diagnosing Attention Distribution by Guiding Simpler Models: Implement a diagnostic framework using fixed pre-trained Attention weights;

-

Training an Adversary: Design an end-to-end adversarial weight training method, noting that this is not an independent experiment but a concrete implementation of adversarial weight generation from the previous experiment.

Figure 13 Model results and control experiment sections used

Uniform as Adversary: The purpose of this experiment is to verify whether the Attention mechanism is necessary across various datasets, because if the task itself is too simple and does not require Attention to achieve good results, then this dataset lacks persuasiveness. Conversely, if Attention is necessary, its removal will lead to a significant decline in model performance. The experiment design is straightforward: directly set the Attention weights to the mean and freeze them, training only the other parts, with all intermediate representations passed from the encoding layer directly calculated as the output of the Attention layer. The F1 metrics for text classification obtained are shown in Figure 14, where the leftmost is the result provided by Jain [5], the middle is the result replicated by Wiegreffe [6], and the rightmost is the result obtained from this experiment. Visually, it can be seen that for most datasets, the effect of using Attention does not significantly improve performance, especially for the latter two datasets, which show almost no increase. The author summarizes that ‘Attention is not explanation if you don’t need it.’

Figure 14 F1 metrics for text classification tasks

Variance with a Model: The author uses random seeds to reinitialize and train the model, obtaining different Attention weights that are regarded as normal weights, which are simply disturbed by noise rather than artificially intervened adversarial weights. By calculating the JSD metric between these weights and the original weights, a normal baseline is obtained, where weights exceeding this baseline are considered abnormal. As shown in Figure 15, this is a comparative chart, with the x-axis representing the JS distance and the y-axis representing the range of maximum Attention weights. From a to d, each represents the baseline generated from random seeds across various datasets, while e and f are the experimental data from Jain [5]’s adversarial weights. It can be seen that the generated weights on the SST dataset have a JSD distance far exceeding the baseline. This indicates that artificially constructed adversarial data is indeed abnormal, significantly exceeding the level of noise. This result aligns with the phenomenon in the previous experiment, where the less Attention contributes, the more unpredictable its weight distribution becomes. However, thus far, this remains a theoretical discussion without concrete data explaining the situation of the constructed Attention weights.

Figure 14 F1 metrics for text classification tasks

Variance with a Model: The author uses random seeds to reinitialize and train the model, obtaining different Attention weights that are regarded as normal weights, which are simply disturbed by noise rather than artificially intervened adversarial weights. By calculating the JSD metric between these weights and the original weights, a normal baseline is obtained, where weights exceeding this baseline are considered abnormal. As shown in Figure 15, this is a comparative chart, with the x-axis representing the JS distance and the y-axis representing the range of maximum Attention weights. From a to d, each represents the baseline generated from random seeds across various datasets, while e and f are the experimental data from Jain [5]’s adversarial weights. It can be seen that the generated weights on the SST dataset have a JSD distance far exceeding the baseline. This indicates that artificially constructed adversarial data is indeed abnormal, significantly exceeding the level of noise. This result aligns with the phenomenon in the previous experiment, where the less Attention contributes, the more unpredictable its weight distribution becomes. However, thus far, this remains a theoretical discussion without concrete data explaining the situation of the constructed Attention weights.

Figure 15 Comparison of weight distributions based on random seed initialization and adversarial weight distributions to original distributions’ JS distances

Diagnosing Attention Distribution by Guiding Simpler Models: This model differs from the previous in that it aims to more accurately test Attention weight distributions, eliminating the influence of contextualized structures. Therefore, the LSTM encoding layer used previously is removed, and only an MLP is used to complete the affine transformation from the embedding layer to the classification results. Afterward, the pre-set, untrained Attention weights are directly used to obtain the model decision results through weighted summation, as shown in Figure 16. The author designs four control experiments based on this:

Figure 15 Comparison of weight distributions based on random seed initialization and adversarial weight distributions to original distributions’ JS distances

Diagnosing Attention Distribution by Guiding Simpler Models: This model differs from the previous in that it aims to more accurately test Attention weight distributions, eliminating the influence of contextualized structures. Therefore, the LSTM encoding layer used previously is removed, and only an MLP is used to complete the affine transformation from the embedding layer to the classification results. Afterward, the pre-set, untrained Attention weights are directly used to obtain the model decision results through weighted summation, as shown in Figure 16. The author designs four control experiments based on this:

-

The pre-set Attention weights are set to the mean, serving as a baseline;

-

Weights are not fixed, allowing them to be trained alongside the MLP;

-

Directly using the original Attention weights used when employing LSTM as the encoder;

-

Using adversarially generated Attention weights (implemented in the next section).

Figure 16 Model using pre-set Attention weights

The results of the four control experiments are shown in Figure 17. Replacing the original Attention weights with weights trained alongside the MLP leads to improved performance. Additionally, in the first experiment, on datasets where Attention weights are significant, the performance of adversarial weights is far lower than that of original weights. This indicates that the importance information of tokens encoded by the Attention mechanism is not independent of the model; it can be transferred to other models using the same dataset. However, the constructed adversarial weights do not discover additional information about the data but rather exploit specific vulnerabilities of the model (where model capability exceeds task requirements).

Figure 17 F1 metrics obtained from four control experiments

Train an Adversary: This is the specific model for generating adversarial weights, differing mainly in the loss function from Jain [5]’s proposed model:

, where

, where  is the model using original weights, and

is the model using original weights, and  is the model using adversarial weights. As shown in Figure 18, it is indeed possible to obtain effective adversarial weights, but it has also been proven in the previous experiment that these weights merely exploit vulnerabilities of the model and do not discover more important information.

is the model using adversarial weights. As shown in Figure 18, it is indeed possible to obtain effective adversarial weights, but it has also been proven in the previous experiment that these weights merely exploit vulnerabilities of the model and do not discover more important information.

4. Conclusion

The interpretability of the Attention mechanism is currently a hot topic of discussion. Many authors have designed various experiments to prove or disprove it, but there are always some incompleteness issues, such as dataset problems, model design issues, and sometimes even discrepancies in the definition of interpretability. Nevertheless, step by step, solid exploration is always necessary. Whether it is the intermediate representation erasure experiments designed by Serrano [4] or the adversarial weight generation experiments designed by Jain [5], they ultimately aim to find examples where Attention weights do not correctly express token importance. Both perspectives are limited to the Attention weights themselves, overlooking many factors that significantly influence results, such as whether the dataset requires the Attention mechanism, and the impact of contextual encoders on Attention weights, all of which could undermine the experiments’ effectiveness. Wiegreffe [6] has strongly rebutted Jain [5]’s experimental design flaws, but the reliability of the method designed to transfer Attention weights remains to be debated.

In addition, many questions have only been raised but not explored:

-

What types of tasks require the Attention mechanism, and is it universal?

-

What impact does the selection of encoding layers have on Attention?

-

Is it reasonable to transfer Attention weights between different models on the same dataset?

-

How do the complexities of models and datasets affect the ease of constructing adversarial weights?

Furthermore, Serrano [4] has also proposed some prospects. Currently, research is still limited to the importance based on Argmax; everyone only considers the impact of Attention weights on the final decision results of the model. However, this is not all, as there may also be features that decrease the probability of a certain category, meaning every item in the output Softmax function has research value, not just the one with the highest probability as the result.

Finally, let us look forward to the new progress that the ACL 2020, with a submission deadline in December, will bring us.

References:

[1] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. Advances in neural information processing systems, pp. 5998-6008.

[2] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pp. 4171-4186.

[3] Dzmitry Bahdanau, Kyunghyun Cho, and Yoshua Bengio. 2015. Neural machine translation by jointly learning to align and translate. In Proceedings of the International Conference on Learning Representations.

[4] Sofia Serrano, and Noah A. Smith. 2019. Is Attention Interpretable. arXiv preprint arXiv:1906.03731.

[5] Sarthak Jain and Byron C. Wallace. 2019. Attention is not Explanation. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers).

[6] Sarah Wiegreffe, and Yuval Pinter. 2019. Attention is not not Explanation. arXiv preprint arXiv:1908.04626.

[7] Zichao Yang, Diyi Yang, Chris Dyer, Xiaodong He, Alex Smola, and Eduard Hovy. 2016. Hierarchical attention networks for document classification. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies.

[8] Yoon Kim. 2014. Convolutional neural networks for sentence classification. In Proceedings of the Conference on Empirical Methods in Natural Language Processing.

[9] Zachary C Lipton. 2016. The mythos of model interpretability. arXiv preprint arXiv:1606.03490.

References:

NAACL 2019 “Attention is Not Explanation”

ACL 2019 “Is Attention Interpretable?”

EMNLP 2019 “Attention is Not Not Explanation”

(As Value) is the output tensor at the i-th position from the previous layer; if the previous layer is a bidirectional RNN structure, then

(As Value) is the output tensor at the i-th position from the previous layer; if the previous layer is a bidirectional RNN structure, then ;

;  is the key (Key) at the i-th position, calculated from the value (Value) through a fully connected layer;

is the key (Key) at the i-th position, calculated from the value (Value) through a fully connected layer;  is the query (Query) of this Attention layer, randomly initialized and synchronized during training. If Attention is not a standalone layer but is built on the decoder, then

is the query (Query) of this Attention layer, randomly initialized and synchronized during training. If Attention is not a standalone layer but is built on the decoder, then  is related to the output at the corresponding position during the encoding phase; the similarity function is the matrix dot product, and the resulting

is related to the output at the corresponding position during the encoding phase; the similarity function is the matrix dot product, and the resulting  is the Attention weight, and

is the Attention weight, and  is the output of the Attention layer.

is the output of the Attention layer.

and

and  are two different output result distributions.

are two different output result distributions.

and

and  are two different distributions, which can be either output results or Attention weights,

are two different distributions, which can be either output results or Attention weights,

, where

, where  is the distribution of the normal result,

is the distribution of the normal result,  is the result distribution after nullifying the highest weight’s corresponding position. To verify how much this distance is, a random position is re-selected

is the result distribution after nullifying the highest weight’s corresponding position. To verify how much this distance is, a random position is re-selected , and the same process is used to nullify the intermediate representation, obtaining the corresponding JSD metric

, and the same process is used to nullify the intermediate representation, obtaining the corresponding JSD metric . At this point, we can use

. At this point, we can use  , the larger the value should be. The charts presented by the author in the article, as shown in Figure 4 on the left, have the x-axis as the difference between the two weights and the y-axis as

, the larger the value should be. The charts presented by the author in the article, as shown in Figure 4 on the left, have the x-axis as the difference between the two weights and the y-axis as  value. The author states that there are almost no negative results in the data obtained, and even if there are, they tend to be close to zero. Additionally, as the difference between the two weights increases, the degree of change in the model’s output distribution also increases correspondingly. However, the author believes that “even if the difference between the two weights reaches a large extent of 0.4 (weights satisfy sum normalization), most positive

value. The author states that there are almost no negative results in the data obtained, and even if there are, they tend to be close to zero. Additionally, as the difference between the two weights increases, the degree of change in the model’s output distribution also increases correspondingly. However, the author believes that “even if the difference between the two weights reaches a large extent of 0.4 (weights satisfy sum normalization), most positive  values are still very close to zero,” hence preliminarily concluding that the Attention mechanism has phenomena contrary to intuition.

values are still very close to zero,” hence preliminarily concluding that the Attention mechanism has phenomena contrary to intuition.

and randomly selected positions

and randomly selected positions to observe the situation of model decision behavior reversal, obtaining experimental data as shown in Figure 5, where the orange area indicates the cases that led to reversal, while the blue area indicates the opposite. However, in most cases, the model decision does not reverse, meaning that this experiment is somewhat ineffective, or further, simply nullifying an intermediate representation does not affect the robustness of the Attention layer, especially when there is an encoder with contextual relevance.

to observe the situation of model decision behavior reversal, obtaining experimental data as shown in Figure 5, where the orange area indicates the cases that led to reversal, while the blue area indicates the opposite. However, in most cases, the model decision does not reverse, meaning that this experiment is somewhat ineffective, or further, simply nullifying an intermediate representation does not affect the robustness of the Attention layer, especially when there is an encoder with contextual relevance.

represents a precise definition of a small value, i.e., using the constructed weights to make the model’s decision and the decision using original weights to achieve the maximum TVD distance;

represents a precise definition of a small value, i.e., using the constructed weights to make the model’s decision and the decision using original weights to achieve the maximum TVD distance;  represents the weight distribution of the i-th group constructed, while

represents the weight distribution of the i-th group constructed, while  is the model result obtained using the i-th group of constructed weights. The optimization objective is to generate the Attention weights of the groups while ensuring that the results produced by each group of weights do not exceed the TVD distance, maximizing the JS distance between each group of weights and the original weights, as well as between each group of weights themselves. The specific implementation of the optimization objective is

is the model result obtained using the i-th group of constructed weights. The optimization objective is to generate the Attention weights of the groups while ensuring that the results produced by each group of weights do not exceed the TVD distance, maximizing the JS distance between each group of weights and the original weights, as well as between each group of weights themselves. The specific implementation of the optimization objective is , where λ is a hyperparameter set to 500 during training.

, where λ is a hyperparameter set to 500 during training. Figure 11 Histogram of JS distance distributions under constraints

Figure 11 Histogram of JS distance distributions under constraints

, where

, where  is the model using original weights, and

is the model using original weights, and  is the model using adversarial weights. As shown in Figure 18, it is indeed possible to obtain effective adversarial weights, but it has also been proven in the previous experiment that these weights merely exploit vulnerabilities of the model and do not discover more important information.

is the model using adversarial weights. As shown in Figure 18, it is indeed possible to obtain effective adversarial weights, but it has also been proven in the previous experiment that these weights merely exploit vulnerabilities of the model and do not discover more important information.