Reprinted from: Machine Heart

Lightning Attention-2 is a new type of linear attention mechanism that aligns the training and inference costs of long sequences with those of a 1K sequence length.

Sequence-length limits in large language models significantly restrict their applications, such as multi-turn dialogue, long-text understanding, and processing and generating multimodal data. The fundamental cause is the Transformer architecture used by current large language models, whose computational complexity is quadratic in the sequence length. As the sequence grows, the required compute grows quadratically. Processing long sequences efficiently has always been one of the challenges for large language models.

Previous methods mostly focused on letting large language models adapt to longer sequences at inference time. Examples include using ALiBi or similar relative position encodings so that the model can handle different input lengths, or interpolating RoPE and similar relative position encodings and then fine-tuning an already trained model to extend its sequence length. These methods give large models some ability to model long sequences, but they do not reduce the actual training and inference overhead.

The OpenNLPLab team attempts to solve the long-sequence problem in large language models once and for all. They proposed and open-sourced Lightning Attention-2, a new linear attention mechanism that aligns the training and inference costs of long sequences with those of a 1K sequence length. Until the GPU memory bottleneck is reached, increasing the sequence length does not slow down model training, which makes pre-training on unlimited lengths possible. At the same time, the inference cost for very long texts is the same as or even lower than that of 1K tokens, which would greatly reduce the current inference costs of large language models. As shown in the figure below, at model sizes of 400M, 1B, and 3B, the training speed of LLaMA powered by FlashAttention2 declines rapidly as the sequence length increases, while the speed of TransNormerLLM powered by Lightning Attention-2 remains virtually unchanged.

- Paper: Lightning Attention-2: A Free Lunch for Handling Unlimited Sequence Lengths in Large Language Models

- Paper link: https://arxiv.org/pdf/2401.04658

- Open-source link: https://github.com/OpenNLPLab/lightning-attention

Introduction to Lightning Attention-2

Keeping the pre-training speed of large models constant across different sequence lengths sounds like an impossible task. In fact, it can be achieved if the computational complexity of the attention mechanism is linear in the sequence length. Since linear attention emerged in 2020【https://arxiv.org/abs/2006.16236】, researchers have been working to make its practical efficiency match its theoretical linear complexity. Before 2023, most work on linear attention focused on bringing its accuracy up to that of Transformer models. In mid-2023, an improved linear attention mechanism【https://arxiv.org/abs/2307.14995】 finally matched the accuracy of state-of-the-art Transformer architectures. However, the key computational trick in linear attention, turning left multiplication into right multiplication to obtain linear complexity (as shown in the figure below), is in practice far slower than direct left multiplication. The reason is that the right-multiplication implementation needs a cumulative sum (cumsum) that involves many loop iterations, and the resulting heavy I/O makes right multiplication much less efficient than left multiplication.

To better understand the ideas behind Lightning Attention-2, let’s first review the formula of traditional softmax attention: O = softmax((QK^T) ⊙ M) V, where Q, K, V, M, O are the query, key, value, mask, and output matrices respectively. Here, M is a lower triangular matrix of all 1s in unidirectional tasks (like GPT) and can be ignored in bidirectional tasks (like BERT), meaning bidirectional tasks have no mask matrix.

The authors summarize the overall idea of Lightning Attention-2 in three points:

1. One of the core ideas of Linear Attention is to eliminate the computationally expensive softmax operator, so that the attention formula can be written as O = ((QK^T) ⊙ M) V. In unidirectional tasks, however, the mask matrix M means this form can still only be computed by left multiplication, so it does not reach O(N) complexity. In bidirectional tasks there is no mask matrix, and the Linear Attention formula simplifies to O = (QK^T) V. The brilliance of Linear Attention is that, using only the associativity of matrix multiplication, this formula can be rewritten as O = Q (K^T V). This form is called right multiplication, and the former is called left multiplication. As shown in Figure 2, it is intuitive that Linear Attention can achieve an appealing O(N) complexity in bidirectional tasks.

2. However, as the decoder-only GPT model gradually becomes the standard for LLMs, how to exploit the right-multiplication property of Linear Attention to accelerate unidirectional tasks has become an urgent challenge. To address this, the authors propose a “divide and conquer” approach that splits the computation of the attention matrix into diagonal and non-diagonal parts and computes each in a different way. As shown in Figure 3, Lightning Attention-2 uses the tiling idea common in computer science and splits the Q, K, V matrices into the same number of blocks. The computation within each block (intra-block) keeps left multiplication because of the mask matrix, so its cost stays quadratic in the block size. The computation between blocks (inter-block) has no mask matrix and can use right multiplication, which gives O(N) complexity. Once both are computed, the Linear Attention output O_i of the i-th block is obtained by adding them directly. The KV state is also accumulated by a cumulative sum so that it can be used when computing the next block. The overall complexity of the Lightning Attention-2 algorithm is therefore a trade-off between the quadratic intra-block term and the linear inter-block term, and the tiling block size determines where the best trade-off lies.

3. Observant readers will notice that the process above covers only the algorithmic part of Lightning Attention-2. It is named Lightning because the authors also carefully consider how efficiently this algorithm executes on GPU hardware. Inspired by the FlashAttention series of works, when computing on the GPU the authors move the split Q_i, K_i, V_i tensors from the GPU's slower, larger-capacity HBM to its faster, smaller-capacity SRAM for computation, which removes a large amount of memory I/O overhead. After a block finishes its Linear Attention computation, its output O_i is written back to HBM. This process is repeated until all blocks are processed.
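The left versus right multiplication in point 1 can be checked numerically. The following is a minimal NumPy sketch for the unmasked (bidirectional) case only; the sizes `n` and `d` and the random inputs are illustrative assumptions, not values from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d = 512, 16  # sequence length and head dimension (illustrative values)
Q = rng.standard_normal((n, d))
K = rng.standard_normal((n, d))
V = rng.standard_normal((n, d))

# Left multiplication: materializes the n x n matrix QK^T first,
# so the cost is O(n^2 * d).
O_left = (Q @ K.T) @ V

# Right multiplication: forms only the d x d matrix K^T V,
# so the cost is O(n * d^2), linear in n for a fixed d.
O_right = Q @ (K.T @ V)

# Both orders give the same result up to floating-point rounding.
same = np.allclose(O_left, O_right)
```

Because matrix multiplication is associative, the two results agree; only the size of the intermediate matrix, and therefore the cost, differs.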

Readers interested in more details can carefully read Algorithm 1 and Algorithm 2 in this article, as well as the detailed derivation process in the paper. The algorithms and derivations differentiate between the forward and backward processes of Lightning Attention-2, helping readers gain a deeper understanding.
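As a rough illustration of the forward pass described in point 2, here is a NumPy sketch of blockwise causal linear attention. It is a simplified assumption-laden version, not the authors' kernel: the function names are hypothetical, it runs on the CPU with no HBM/SRAM management, and it leaves out anything not described above (such as normalization or decay terms that a full implementation may include).

```python
import numpy as np

def causal_linear_attention_reference(Q, K, V):
    """Masked left multiplication: O = ((Q K^T) * M) V with lower-triangular M."""
    n = Q.shape[0]
    M = np.tril(np.ones((n, n)))
    return ((Q @ K.T) * M) @ V

def causal_linear_attention_blocked(Q, K, V, block_size):
    """Blockwise version: intra-block left product plus inter-block right product."""
    n, d = Q.shape
    d_v = V.shape[1]
    O = np.zeros((n, d_v))
    kv = np.zeros((d, d_v))  # running sum of K_j^T V_j over all earlier blocks
    for start in range(0, n, block_size):
        end = min(start + block_size, n)
        Qi, Ki, Vi = Q[start:end], K[start:end], V[start:end]
        b = end - start
        mask = np.tril(np.ones((b, b)))
        # Intra-block: the causal mask is needed, so use left multiplication.
        intra = ((Qi @ Ki.T) * mask) @ Vi
        # Inter-block: every earlier block is fully visible, so use the KV state.
        inter = Qi @ kv
        O[start:end] = intra + inter
        # Accumulate this block into the KV state for the next block.
        kv += Ki.T @ Vi
    return O
```

With block size B, the intra-block work in this sketch is O(N·B·d) in total and the inter-block work is O(N·d²), so both terms are linear in N once B is fixed.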

Accuracy Comparison of Lightning Attention-2

The researchers first compared the accuracy of Lightning Attention-2 and Lightning Attention-1 on a small (400M-parameter) model. As shown in the figure below, there is little difference between the two.

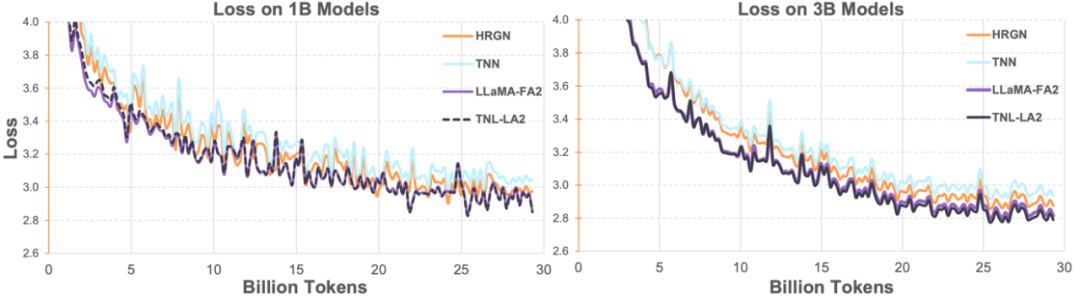

Next, the researchers compared TransNormerLLM powered by Lightning Attention-2 (TNL-LA2) with other advanced non-Transformer architectures and with LLaMA powered by FlashAttention2, at 1B and 3B model sizes and on the same corpus. As shown in the figure below, TNL-LA2 follows a similar trend to LLaMA and reaches a lower loss. This experiment indicates that, in language modeling, Lightning Attention-2 has accuracy comparable to state-of-the-art Transformer architectures.

On large language model tasks, the researchers compared TNL-LA2 15B with Pythia at a similar model size on common benchmarks. As shown in the table below, with the same number of training tokens, TNL-LA2 slightly outperforms the softmax-attention-based Pythia model on common-sense reasoning and on multiple-choice comprehensive ability.

Speed Comparison of Lightning Attention-2

The researchers compared the single-module speed and memory usage of Lightning Attention-2 with those of Lightning Attention-1 and FlashAttention2. As shown in the figure below, unlike Lightning Attention-1 and FlashAttention2, the runtime of Lightning Attention-2 grows strictly linearly with sequence length. In terms of memory usage, all three show similar trends, because the memory usage of FlashAttention2 and Lightning Attention-1 is also approximately linear, but Lightning Attention-2 uses less memory.

The authors note that this work mainly addresses the training speed of linear attention networks, making the training speed for long sequences of arbitrary length similar to that of 1K sequences. Inference speed is not discussed in much detail, because linear attention can be losslessly converted into RNN mode at inference time and achieves a similar effect there: the cost of generating a single token stays constant. For Transformers, by contrast, the cost of generating the current token depends on the number of previous tokens.
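The RNN-mode conversion mentioned above can be sketched as follows. This is a minimal NumPy illustration of unnormalized causal linear attention only; the function names are hypothetical, and it omits any normalization or decay that a real model may apply.

```python
import numpy as np

def recurrent_decode(Q, K, V):
    """RNN mode: a fixed-size KV state is updated once per token."""
    n, d = Q.shape
    kv_state = np.zeros((d, V.shape[1]))
    outputs = []
    for t in range(n):
        # The state update costs O(d * d_v) no matter how many tokens came before.
        kv_state += np.outer(K[t], V[t])
        outputs.append(Q[t] @ kv_state)
    return np.stack(outputs)

def parallel_causal(Q, K, V):
    """Parallel (training-style) form with a lower-triangular mask."""
    n = Q.shape[0]
    return ((Q @ K.T) * np.tril(np.ones((n, n)))) @ V

rng = np.random.default_rng(0)
Q = rng.standard_normal((20, 4))
K = rng.standard_normal((20, 4))
V = rng.standard_normal((20, 4))

# The recurrent form reproduces the masked parallel form token by token.
matches = np.allclose(recurrent_decode(Q, K, V), parallel_causal(Q, K, V))
```

The state has shape d × d_v regardless of position, which is why the per-token cost does not grow with the number of previous tokens, whereas softmax attention has to attend over a cache that grows with every generated token.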

The authors compared the inference speed of TransNormerLLM-7B powered by Lightning Attention-1 with that of common 7B models. As shown in the figure below, at approximately the same parameter count, the throughput of Lightning Attention-1 is 4 times that of Baichuan and more than 3.5 times that of ChatGLM, a clear advantage in inference speed.

Lightning Attention-2 represents a significant advancement in linear attention mechanisms, enabling it to perfectly replace traditional softmax attention in terms of both accuracy and speed, providing sustainable scalability for increasingly larger models, and offering a pathway to efficiently handle infinitely long sequences. The OpenNLPLab team will continue to research sequence parallel algorithms based on linear attention mechanisms to address the current memory bottleneck issues.
